So, you're ready to deploy your containerized application on AWS. You've heard about Amazon Elastic Container Service (ECS), AWS's powerful native container orchestrator, and it seems like the right tool for the job. But then you hit a fork in the road: should you use the EC2 launch type or the Fargate launch type? One offers deep control but means managing servers, while the other promises serverless simplicity but with less direct influence over the underlying infrastructure. What's the real difference, and which one is right for your application?

This post breaks down the EC2 and Fargate launch types for ECS, compares them head-to-head across key areas, explores ideal use cases, and even shows some code differences. By the end, you'll have a clearer picture to help you choose your container launchpad on AWS.

Quick Intro to ECS: The Basics

Before diving into the launch types and comparing Fargate vs. EC2, let's quickly cover what Amazon Elastic Container Service (ECS) actually is. At its core, ECS is a fully managed container orchestration service designed to make deploying, managing, and scaling containerized applications on AWS simpler. It integrates deeply with other AWS services like Elastic Load Balancing, IAM, and VPC, providing a cohesive environment for your applications.

Think of ECS as having a few key building blocks:

- Cluster: This is a logical grouping of the compute capacity where your containers run. Importantly, a cluster can contain a mix of capacity provided by EC2 instances and Fargate.

- Task Definition: This is the blueprint or recipe for your application. It's a JSON file specifying details like which Docker images to use, CPU and memory requirements, networking settings, and data volumes.

- Task: A running instance of your Task Definition within a cluster. If the Task Definition is the recipe, the Task is the cake you baked from it.

- Service: This component ensures that a specified number of Tasks are continuously running and healthy. If a Task fails, the ECS Service automatically replaces it, keeping your application available.
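To make the building blocks concrete, here is a minimal sketch of a Task Definition and a Service expressed as Python dictionaries and rendered to JSON. The field names follow the ECS task definition and service parameters, but the family, image URI, cluster, and service names are hypothetical placeholders, and a real definition would usually carry more settings (roles, logging, and so on).

```python
import json

# Hypothetical names and image URI, used only for illustration.
task_definition = {
    "family": "web-app",
    "containerDefinitions": [
        {
            "name": "web",
            "image": "example.com/web-app:1.0",
            "cpu": 256,       # CPU units (1024 units = 1 vCPU)
            "memory": 512,    # hard memory limit in MiB
            "essential": True,
            "portMappings": [{"containerPort": 8080, "protocol": "tcp"}],
        }
    ],
}

# A Service points at a Task Definition and keeps `desiredCount` Tasks running.
service = {
    "serviceName": "web-service",
    "cluster": "demo-cluster",
    "taskDefinition": task_definition["family"],
    "desiredCount": 3,
}


def render(definition: dict) -> str:
    """Serialize a definition to the JSON form ECS tooling works with."""
    return json.dumps(definition, indent=2, sort_keys=True)


if __name__ == "__main__":
    print(render(task_definition))
    print(render(service))
```

The Task Definition is the recipe, and the Service says how many cakes to keep on the table: here, three running Tasks.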

When you run tasks within an ECS cluster, you need to provide the underlying compute power. This brings us to the two main "flavors" or launch types for providing that capacity: EC2 and Fargate.

Meet the Contenders: EC2 vs. Fargate Explained

Let's properly introduce the two options.

A. ECS on EC2: The Hands-On Approach

With the EC2 launch type, your containers run on a cluster composed of Amazon Elastic Compute Cloud (EC2) instances that reside within your AWS account. The key thing here is that you are responsible for managing these underlying EC2 instances.

This responsibility includes:

- Provisioning: Selecting the right EC2 instance types (e.g., general purpose, compute-optimized), sizes, and operating system images (AMIs). Using the AWS-provided ECS-optimized AMIs is recommended as they come pre-configured.

- Scaling: Managing the number of EC2 instances in your cluster. While ECS handles placing tasks onto these instances, you need to scale the instance fleet itself, either manually or using EC2 Auto Scaling Groups, to match workload demands.

- Patching & Updates: Keeping the operating system on your EC2 instances patched and the ECS container agent software up-to-date.

- Security: Configuring instance-level security, such as EC2 security groups, managing SSH access, and hardening the host OS.

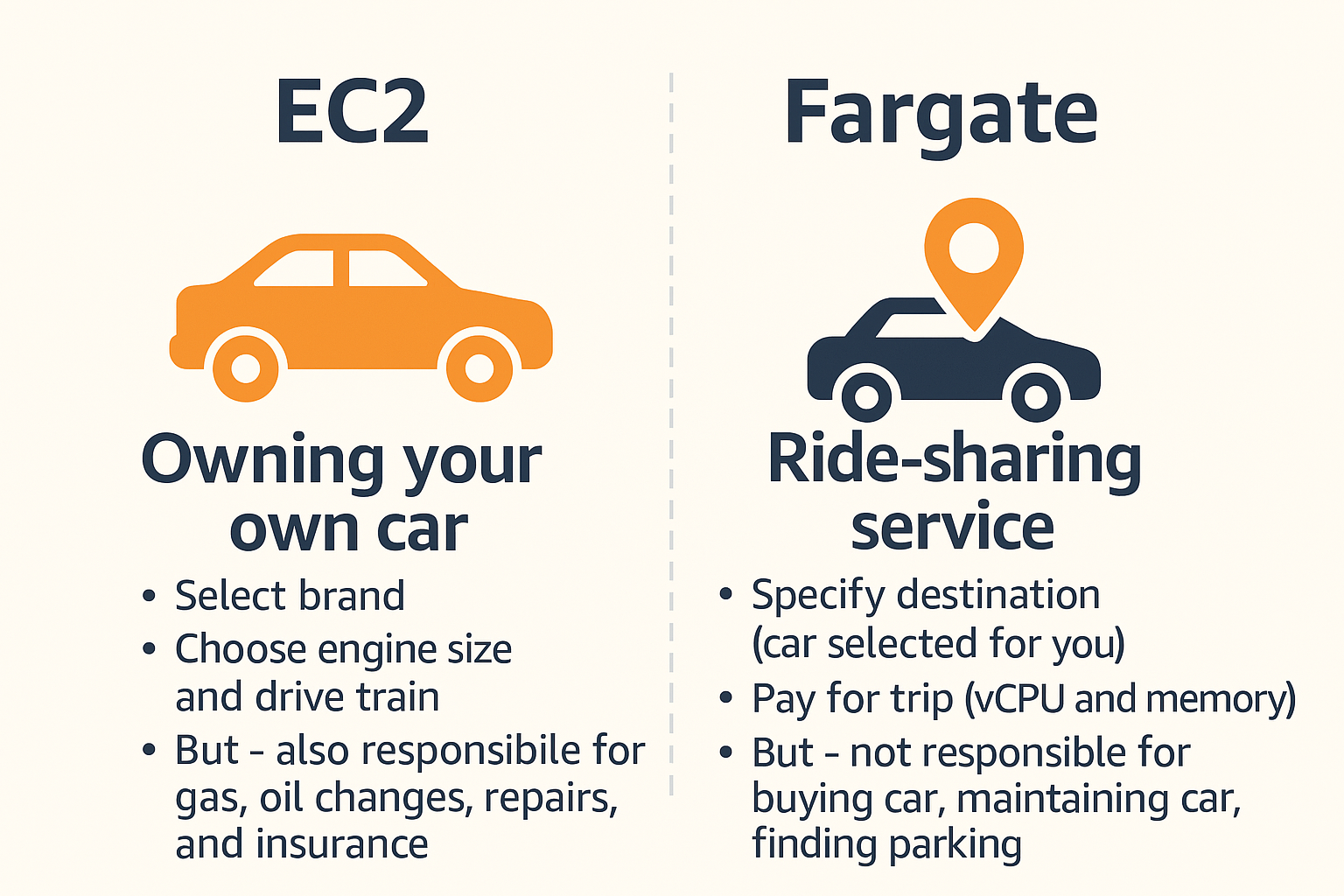

Think of the EC2 launch type like owning and maintaining your own car. You have complete control over it, you can modify it, choose the engine size, but you're also responsible for gas, oil changes, repairs, and insurance.

B. ECS on Fargate: The Serverless Route

AWS Fargate offers a fundamentally different approach. It's a serverless compute engine specifically designed for containers. With Fargate, you define your application's needs in the Task Definition (CPU, memory, container image) and tell ECS to run it using the Fargate launch type. Fargate then provisions and manages the necessary underlying compute infrastructure to run your containers, without you ever needing to interact with or even see the servers.

AWS handles the "undifferentiated heavy lifting":

- Server Management: No instances to provision, patch, or secure.

- Scaling Infrastructure: Fargate automatically scales the underlying capacity needed to run your tasks.

- Isolation: Each Fargate task runs within its own isolated boundary, not sharing the underlying kernel, CPU, memory, or network interface with other tasks, enhancing security.

Think of Fargate like using a ride-sharing service. You specify your destination (your application requirements) and pay for the trip (the vCPU and memory used). You don't worry about buying the car, maintaining it, or finding parking; the service handles all that for you.
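In practice, much of the difference between the two launch types shows up in a handful of Task Definition fields. The sketch below derives an EC2-flavored and a Fargate-flavored definition from the same base and lists the top-level keys that differ. The base definition, image URI, and helper names (`for_ec2`, `for_fargate`, `changed_keys`) are hypothetical; the sizes chosen are just one valid example.

```python
import copy

# Hypothetical base definition shared by both launch types.
BASE = {
    "family": "web-app",
    "containerDefinitions": [
        {
            "name": "web",
            "image": "example.com/web-app:1.0",
            "essential": True,
            "portMappings": [{"containerPort": 8080}],
        }
    ],
}


def for_ec2(base: dict) -> dict:
    """EC2 flavor: bridge networking, memory limit set on the container."""
    td = copy.deepcopy(base)
    td["requiresCompatibilities"] = ["EC2"]
    td["networkMode"] = "bridge"
    td["containerDefinitions"][0]["memory"] = 512  # MiB
    return td


def for_fargate(base: dict, cpu: str = "256", memory: str = "512") -> dict:
    """Fargate flavor: awsvpc networking plus task-level CPU and memory."""
    td = copy.deepcopy(base)
    td["requiresCompatibilities"] = ["FARGATE"]
    td["networkMode"] = "awsvpc"
    td["cpu"] = cpu        # task-level size, expressed as strings
    td["memory"] = memory
    return td


def changed_keys(a: dict, b: dict) -> list:
    """Top-level keys whose values differ between two definitions."""
    return sorted(k for k in set(a) | set(b) if a.get(k) != b.get(k))


if __name__ == "__main__":
    print(changed_keys(for_ec2(BASE), for_fargate(BASE)))
```

The container image and port mappings stay the same; what changes is how the task is sized and networked.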

Head-to-Head: EC2 vs. Fargate Showdown

Let's break down the comparison across key areas.

A. Infrastructure Management

- EC2: The defining characteristic is user responsibility for the EC2 fleet. This includes patching the OS, updating the ECS agent, scaling the number of instances (distinct from scaling tasks on the instances), selecting instance types, and securing the hosts. This translates directly to operational overhead – time and effort spent by your team.

- Fargate: AWS abstracts away all underlying infrastructure management. You don't worry about patching servers, managing capacity, or updating agents. This significantly reduces operational burden.

The fundamental trade-off is clear: Fargate offers operational simplicity by taking over infrastructure management, but this comes at the cost of losing direct control over that infrastructure. EC2 retains that control but requires you to perform the management tasks. Choosing between them often hinges on how much your team values reducing operational tasks versus needing fine-grained infrastructure control.

B. Control & Flexibility

- EC2: This is where EC2 shines. You have maximum control. You can choose from the vast catalog of EC2 instance types, including those optimized for compute, memory, or equipped with GPUs for specialized workloads like machine learning. You get full operating system access, allowing you to use custom AMIs, install specific software, or tweak kernel parameters. Direct host access is possible if needed. EC2 supports a wider range of task definition parameters and Docker features, including different networking modes.

- Fargate: Control is intentionally limited in favor of simplicity. AWS manages the instances, so you don't choose specific types; instead, you select from predefined combinations of vCPU and memory for your tasks. There's no access to the underlying OS or host environment. Critically, Fargate does not support GPU instances. Several task definition parameters available in EC2 are unsupported or restricted in Fargate, such as running privileged containers, using host networking, or certain Linux parameters.

If your application demands specific hardware (like GPUs), requires OS-level customization, or relies on Docker features not supported by Fargate, EC2 is the necessary choice. Fargate's serverless nature requires abstracting away these granular controls, making it less flexible but easier to manage for standard applications.
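A quick way to see whether an existing Task Definition would fit within these limits is to check it against the restrictions mentioned above. The sketch below is an assumption-laden illustration rather than an official validator: the `fargate_issues` helper is hypothetical, it covers only three checks (network mode, privileged containers, CPU/memory combination), and the size table is a partial snapshot that you should verify against the current AWS documentation.

```python
from typing import List

# Task-level CPU units -> allowed memory values in MiB.
# Partial table, assumed current at the time of writing; larger sizes exist.
FARGATE_MEMORY_BY_CPU = {
    256: [512, 1024, 2048],
    512: list(range(1024, 4096 + 1, 1024)),
    1024: list(range(2048, 8192 + 1, 1024)),
    2048: list(range(4096, 16384 + 1, 1024)),
    4096: list(range(8192, 30720 + 1, 1024)),
}


def fargate_issues(task_def: dict) -> List[str]:
    """Return reasons a task definition would not fit Fargate (empty if none found)."""
    issues = []
    if task_def.get("networkMode") != "awsvpc":
        issues.append("networkMode must be awsvpc")
    for container in task_def.get("containerDefinitions", []):
        if container.get("privileged"):
            issues.append(f"container {container.get('name')} requests privileged mode")
    cpu = int(task_def.get("cpu", 0))
    memory = int(task_def.get("memory", 0))
    if memory not in FARGATE_MEMORY_BY_CPU.get(cpu, []):
        issues.append(f"cpu={cpu} / memory={memory} is not a listed combination")
    return issues


# Hypothetical definitions for illustration.
ec2_style = {
    "networkMode": "host",
    "cpu": "300",
    "memory": "700",
    "containerDefinitions": [{"name": "agent", "privileged": True}],
}
fargate_style = {
    "networkMode": "awsvpc",
    "cpu": "512",
    "memory": "2048",
    "containerDefinitions": [{"name": "web"}],
}

if __name__ == "__main__":
    for name, td in [("ec2_style", ec2_style), ("fargate_style", fargate_style)]:
        print(name, fargate_issues(td))
```

An empty list only means none of these three checks failed; it does not guarantee that Fargate will accept the definition.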

C. Pricing Models

- EC2: You pay for the EC2 instances you launch, typically billed per second (with a 60-second minimum) or, for some operating systems, per hour. The cost depends on the instance type, size, and region. A key aspect is that you pay for the entire instance capacity for as long as it's running, regardless of whether your containers are fully utilizing it. Significant cost savings are possible for predictable workloads using Reserved Instances (RIs) or Savings Plans (SPs), offering discounts up to 72% for 1- or 3-year commitments. Spot Instances offer even deeper discounts (up to 90%) for fault-tolerant workloads. Standard data transfer costs also apply.

- Fargate: Pricing is based on the vCPU and memory resources requested by your task, billed per second (with a 1-minute minimum for Linux, 5-minute for Windows) from image pull start to task termination. This means you pay for what each task requests for as long as it runs, whether or not the application fully uses it, but never for idle host capacity. Fargate Spot provides discounts up to 70% for interruptible tasks, and Compute Savings Plans offer up to 50% savings for commitment. There's also a charge for ephemeral storage configured beyond the default 20GB per task.

The cost comparison isn't always straightforward. Fargate's per-task pricing aligns costs directly with application resource requests, making it potentially cheaper for workloads with low or highly variable utilization, as you avoid paying for idle EC2 capacity. However, Fargate's raw compute cost per vCPU/GB is generally higher than EC2's on-demand rates. For workloads with high, consistent utilization (e.g., consistently above 70-80% of instance capacity), EC2 often becomes more cost-effective, especially when leveraging the deeper discounts available through RIs or SPs. Achieving high utilization on EC2 requires careful "bin packing" (efficiently placing tasks onto instances), which adds operational complexity. This operational effort might make Fargate's higher compute price acceptable for teams prioritizing simplicity.
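The break-even logic can be sketched with a few lines of arithmetic. The prices below are round, purely illustrative placeholders, not current AWS rates, and the instance (2 vCPU, 8 GB) is hypothetical; plug in the numbers for your own region and instance type. The model also assumes tasks fill CPU and memory proportionally, which real bin packing rarely achieves.

```python
# ILLUSTRATIVE placeholder prices (USD). Not actual AWS rates.
FARGATE_VCPU_HOUR = 0.04     # per vCPU-hour
FARGATE_GB_HOUR = 0.0045     # per GB-hour
EC2_INSTANCE_HOUR = 0.10     # hypothetical 2 vCPU / 8 GB instance


def fargate_hourly(vcpu: float, gb: float) -> float:
    """Hourly Fargate cost for a task requesting `vcpu` and `gb`."""
    return vcpu * FARGATE_VCPU_HOUR + gb * FARGATE_GB_HOUR


def fargate_task_cost(vcpu: float, gb: float, seconds: float,
                      minimum_seconds: float = 60) -> float:
    """Per-second billing with a minimum charge (60 s models the Linux case)."""
    billable = max(seconds, minimum_seconds)
    return fargate_hourly(vcpu, gb) * billable / 3600


def break_even_utilization(instance_hourly: float, instance_vcpu: float,
                           instance_gb: float) -> float:
    """Fraction of the instance your tasks must fill before EC2 is cheaper.

    Below this fraction, running the same tasks on Fargate costs less than
    paying for the whole instance.
    """
    return instance_hourly / fargate_hourly(instance_vcpu, instance_gb)


if __name__ == "__main__":
    u = break_even_utilization(EC2_INSTANCE_HOUR, 2, 8)
    print(f"EC2 becomes cheaper above roughly {u:.0%} utilization")
    print(f"A 10-second task is billed like a 60-second one: "
          f"{fargate_task_cost(1, 2, 10):.6f} USD")
```

With these placeholder numbers the break-even lands in the same high-utilization range discussed above; discounts such as RIs, Savings Plans, or Spot would shift it in either direction.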

D. Networking Nuances

- EC2: Offers flexibility with support for multiple Docker network modes: bridge (common for Linux), host (direct host network access), awsvpc (task-specific ENI), and none for Linux; default (NAT) and awsvpc for Windows. While flexible, modes like host can lead to port conflicts, and bridge might introduce minor performance overhead compared to awsvpc or host.

- Fargate: Mandates the use of the awsvpc network mode. In this mode, every Fargate task gets its own Elastic Network Interface (ENI) and an IP address within your VPC. This approach simplifies networking significantly, as tasks behave much like standard EC2 instances within the VPC. It allows you to apply Security Groups directly at the task level for granular traffic control and enables the use of VPC Flow Logs for task-level network monitoring.

The awsvpc mode (used by Fargate and recommended for EC2) means each task consumes an ENI and an IP address. EC2 instances have limits on the number of ENIs they can support, potentially restricting task density (though ENI trunking can increase this limit on supported Linux instances). With Fargate, where every task gets an ENI, careful planning of your VPC's IP address space is crucial, especially at scale.

Fargate standardizes on the awsvpc mode, enforcing modern networking best practices (task-level security, direct VPC integration) but removing the flexibility of other modes. EC2 offers choices, but even there, awsvpc is generally the recommended approach for new applications. The main practical implication of awsvpc is the need for adequate IP address planning within your VPC.
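IP planning for awsvpc mode is mostly simple arithmetic, sketched here with Python's standard `ipaddress` module. AWS reserves five addresses in every subnet, so the usable count is slightly lower than the CIDR size suggests. The subnet ranges and the count of addresses used by other resources are hypothetical examples.

```python
import ipaddress
from typing import Iterable

# AWS reserves the first four addresses and the last address of each subnet.
AWS_RESERVED_PER_SUBNET = 5


def usable_ips(cidr: str) -> int:
    """Addresses in a VPC subnet that can actually be assigned."""
    network = ipaddress.ip_network(cidr)
    return max(network.num_addresses - AWS_RESERVED_PER_SUBNET, 0)


def max_awsvpc_tasks(subnet_cidrs: Iterable[str],
                     ips_used_by_other_resources: int = 0) -> int:
    """Upper bound on concurrent awsvpc tasks (one IP per task) across subnets."""
    total = sum(usable_ips(cidr) for cidr in subnet_cidrs)
    return max(total - ips_used_by_other_resources, 0)


if __name__ == "__main__":
    subnets = ["10.0.1.0/24", "10.0.2.0/24"]  # hypothetical subnets
    print(usable_ips("10.0.1.0/24"))
    print(max_awsvpc_tasks(subnets, ips_used_by_other_resources=50))
```

Remember that deployments temporarily run old and new tasks side by side, so leave headroom beyond your steady-state task count.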

E. Ease of Use & Deployment Speed

- EC2: Requires more initial setup and ongoing management effort. You need to provision instances, configure them, set up scaling, and handle maintenance. The learning curve can be steeper. While the initial launch of EC2 instances takes a few minutes, subsequent task deployments onto existing, warm instances can be faster if container images are already cached locally on the instance disk.

- Fargate: Offers a significantly easier setup and faster path to getting containers running. The management overhead is much lower. Fargate tasks typically launch relatively quickly (often cited around 30-45 seconds), but a key factor is that Fargate must pull the container image from the registry every time a new task starts, as there's no persistent host for caching. This image pull can become a bottleneck, especially for large images. AWS has introduced Seekable OCI (SOCI) index support to mitigate this by enabling faster container startup through lazy loading of images. Fargate scaling is also conceptually simpler, as it operates directly at the task level without needing separate instance scaling.

Fargate clearly wins on ease of use and speed to initial deployment, making it attractive for teams prioritizing simplicity and rapid iteration. EC2 requires more upfront work but can potentially offer faster scaling or deployment times for tasks if the underlying infrastructure is already provisioned, warmed up, and has images cached. Fargate's mandatory image pull remains a consideration for latency-sensitive scaling or deployments involving large images, though SOCI aims to improve this.

When to Choose EC2 over Fargate: The Control Freak's Choice

The EC2 launch type is the right path when your requirements demand deep control over the environment or involve capabilities that Fargate simply doesn't provide. Consider EC2 if:

- You Need GPU Acceleration: Your workloads involve machine learning training, scientific simulations, video rendering, or anything else that benefits significantly from GPU hardware. EC2 offers GPU-equipped instance types; Fargate does not.

- Strict Compliance or Licensing Needs: You need to meet specific regulatory requirements that mandate dedicated physical servers (using EC2 Dedicated Hosts) or need to use existing software licenses tied to specific hardware (BYOL on Dedicated Hosts).

- Full OS/Instance Control is Mandatory: Your application requires a specific operating system customization, kernel module, host-level monitoring agent, or direct access to the underlying instance for troubleshooting or specific configurations.

- Cost Optimization for Predictable High Load: You run applications with very high, steady-state resource utilization and can commit to Reserved Instances or Savings Plans. By carefully selecting instances and maximizing their usage ("bin packing"), EC2 can offer a lower overall compute cost in these scenarios.

- You Rely on Unsupported Fargate Features: Your containers need specific Docker capabilities (like privileged mode) or networking modes (host, bridge) that Fargate doesn't support.

When Fargate Is Your Best Bet (Over EC2): Simplicity with Controlled Capabilities

Fargate shines when the primary goal is to minimize operational complexity and accelerate development, letting AWS handle the infrastructure heavy lifting. Fargate is often the better choice when:

- Simplifying Operations is Key: You want to drastically reduce the time spent managing servers – patching, scaling instances, updating agents. Fargate's serverless nature frees up your team to focus on application code. This is especially valuable for smaller teams or organizations prioritizing speed.

- Running Microservices: Fargate makes it easy to deploy and scale numerous small, independent services without the complexity of managing underlying cluster capacity or worrying about instance bin packing.

- Executing Batch Jobs: Ideal for running scheduled or event-triggered tasks that perform a job and then terminate. You don't need to keep infrastructure running just for intermittent batch processing.

- Handling Variable or Unpredictable Loads: Fargate automatically scales the compute resources based on the number of tasks you need, and its pay-per-task pricing model aligns well with fluctuating demand, avoiding costs for idle capacity.

- Deploying Standard Web Apps & APIs: For common web applications or backend APIs that don't have exotic infrastructure requirements, Fargate provides a fast and simple deployment path.
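The criteria from the two lists above can be condensed into a small decision helper. This is a rough sketch of the reasoning in this post, not an official AWS rule set: the `Workload` fields and the `suggest_launch_type` function are hypothetical names, and real decisions usually weigh more factors (team size, budget, existing tooling).

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Workload:
    needs_gpu: bool = False
    needs_host_access: bool = False            # custom AMI, kernel modules, host agents
    needs_privileged_or_host_networking: bool = False
    needs_dedicated_hosts: bool = False        # compliance or BYOL licensing
    steady_high_utilization: bool = False      # predictable load, RIs/SPs possible


def suggest_launch_type(w: Workload) -> Tuple[str, List[str]]:
    """Return a suggested launch type plus the reasons behind it."""
    hard_requirements = [
        (w.needs_gpu, "GPU hardware is required"),
        (w.needs_host_access, "OS or instance-level control is required"),
        (w.needs_privileged_or_host_networking,
         "relies on privileged mode or host/bridge networking"),
        (w.needs_dedicated_hosts, "dedicated hosts are required"),
    ]
    reasons = [text for flag, text in hard_requirements if flag]
    if reasons:
        return "EC2", reasons
    if w.steady_high_utilization:
        return "EC2", ["steady high utilization can make committed EC2 capacity cheaper"]
    return "FARGATE", ["no special infrastructure needs; lower operational overhead"]


if __name__ == "__main__":
    print(suggest_launch_type(Workload()))
    print(suggest_launch_type(Workload(needs_gpu=True)))
    print(suggest_launch_type(Workload(steady_high_utilization=True)))
```

The hard requirements are checked first because they rule Fargate out entirely, while the cost argument is a softer preference you may still trade away for simplicity.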

Enter Kapstan: Flexibility for Control Freaks, with the Simplicity of Fargate

Kapstan offers a modern alternative that combines the flexibility of EC2 with the simplicity of Fargate—without compromising on control or ease of use. Instead of forcing a trade-off, Kapstan provisions and manages a production-grade EKS (Elastic Kubernetes Service) environment directly inside your own AWS account. This means you retain full ownership and visibility of your infrastructure, including the underlying EC2 instances, while Kapstan automates the heavy lifting behind the scenes.

With Kapstan, you get:

- EC2-level control over compute, networking, and autoscaling policies

- Kubernetes-native workflows, giving your team access to the broader cloud-native ecosystem

- Zero infrastructure management—provisioning, patching, node scaling, and security updates are all handled for you

- One-click migrations from ECS, Beanstalk, or Fargate to EKS

- No vendor lock-in since everything runs inside your AWS account

Whether you’re migrating from legacy platforms or scaling new workloads, Kapstan makes Kubernetes feel as effortless as Fargate—while giving you the power and extensibility of a fully managed EC2-backed EKS cluster.

Conclusion: EC2 vs. Fargate - Making Your Choice

Choosing between ECS on EC2 and ECS on Fargate boils down to a fundamental trade-off: Control vs. Simplicity.

- EC2 offers maximum control, flexibility (especially for specialized hardware like GPUs), and potentially lower costs for highly utilized, predictable workloads (when using RIs/SPs). However, this comes with the responsibility and operational overhead of managing the underlying EC2 instances.

- Fargate provides serverless simplicity, drastically reducing operational burden and allowing teams to focus purely on their applications. It excels with microservices, batch jobs, and variable workloads. The trade-off is less control, no GPU support, and potentially higher costs than optimized EC2 for sustained high loads.

But what if you want the best of both worlds: the control and flexibility often associated with EC2, but without the heavy lifting of managing the infrastructure yourself? That's where platforms like Kapstan enter the picture. Kapstan takes an interesting approach by deploying and fully managing an AWS EKS (Elastic Kubernetes Service) control plane, along with the underlying EC2 host instances, within your own AWS account. This aims to give you the flexibility and power that come with EC2 and the rich Kubernetes ecosystem via EKS, but wraps them in a managed service layer that handles operational tasks like provisioning, scaling, and security updates for you. It's a different model that tries to bridge the gap between the direct control of EC2 and the serverless model of Fargate.