Amazon Elastic Container Service (ECS) is a popular choice for startups and growing organizations because of its smooth integration with AWS and its ease of use. As an application's user base grows, however, multi-cloud capabilities, portability, and access to the large ecosystem of Kubernetes-native tools often become important, because together they make for a more flexible and extensible container orchestration platform. Migrating to Amazon Elastic Kubernetes Service (EKS) is an effective way to meet these demands.

In this blog, we will go through the challenges of scaling workloads in ECS and understand how an EKS migration provides scalability and the flexible ecosystem required for dynamic applications. Let’s dive into the key considerations, benefits, and steps.

Glossary

- ALB – Application Load Balancer, an AWS load balancer that operates at the application layer (layer 7), i.e. HTTP/HTTPS.

- NLB – Network Load Balancer, an AWS load balancer that operates at the transport layer (layer 4), i.e. TCP/UDP.

- Pods – The smallest deployable units in Kubernetes, containing one or more containers.

- Deployments – Kubernetes objects that manage and update Pods.

- Services – A Kubernetes abstraction that defines how to access Pods.

- Helm – A package manager for Kubernetes, simplifying deployment and management of applications.

- kubectl – A command-line tool for interacting with Kubernetes clusters.

- eksctl – A command-line tool for creating and managing Amazon EKS clusters.

- VPC – A virtual private cloud in AWS that provides networking isolation.

- CloudWatch – A monitoring service for AWS resources and applications.

- IAM – Identity and Access Management in AWS, controlling user and resource permissions.

- NGINX – A web server and reverse proxy server commonly used in Kubernetes for load balancing.

- Persistent Volume – A storage resource in Kubernetes that persists beyond pod lifetimes.

- Elastic File System – A scalable file storage service for AWS cloud resources.

- S3 – A scalable object storage service for storing and retrieving data in AWS.

- KEDA – An event-driven autoscaler for Kubernetes, scaling pods based on external event sources or metrics.

Key Differences Between ECS and EKS

Both ECS and EKS are container management services within AWS. However, they differ in functionality and operation. Let’s delve into each service to understand its unique features.

Elastic Container Service

Elastic Container Service is a fully managed container service by AWS that simplifies running Docker containers on the AWS infrastructure by taking care of much of the underlying complexity. It tightly integrates with AWS services like IAM, CloudWatch, and VPC for resource management. ECS offers two deployment options:

- The EC2 launch type, where you provision and manage the underlying EC2 instances for your containers.

- The Fargate launch type, where AWS handles the infrastructure, allowing you to focus solely on your containerized applications.

ECS offers straightforward scaling mechanisms, mainly through the EC2 launch type, where you can manually scale instances or use AWS Auto Scaling for automated scaling. While ECS supports auto-scaling with Fargate, the process is more straightforward and less granular than EKS. ECS is tightly integrated with the AWS ecosystem, leveraging native services like CloudWatch, IAM, and AWS Load Balancers for monitoring, security, and networking. This tight integration simplifies management but may limit flexibility when incorporating third-party tools.

Elastic Kubernetes Service

Elastic Kubernetes Service (EKS) is AWS’s managed Kubernetes service, providing the flexibility and scalability of Kubernetes without the complexity of managing the control plane. Compared to ECS, Kubernetes is a more complex orchestration platform that offers greater flexibility and scalability. With EKS, AWS manages the Kubernetes control plane, while users remain responsible for the data plane: either worker nodes running on EC2 instances or pods running on AWS Fargate. Kubernetes, through EKS, allows for advanced features like multi-cluster management, auto-scaling, and robust networking within the AWS VPC, along with integration with AWS services.

EKS provides more advanced scaling capabilities than ECS. It supports the Kubernetes Horizontal Pod Autoscaler and Cluster Autoscaler, allowing fine-grained scaling control at the pod and node levels. Kubernetes manages the horizontal scaling of pods, complex deployment strategies, and rolling updates, and because Kubernetes is portable, the same scaling configuration can be reused on clusters running with other cloud providers. EKS integrates well with the AWS ecosystem, offering compatibility with CloudWatch, IAM, and AWS Load Balancers. However, its foundation in Kubernetes adds significant flexibility, enabling integration with third-party tools such as Prometheus, Grafana, and others. This makes EKS a versatile choice for organizations with complex architectures requiring robust monitoring, logging, and CI/CD capabilities.

Why Consider Migrating from ECS to EKS?

Migrating from Amazon Elastic Container Service (ECS) to Amazon Elastic Kubernetes Service (EKS) is beneficial when your organization requires:

- Advanced Features: Such as cross-cluster management and sophisticated scaling options.

- Multi-Cloud Compatibility: The ability to run workloads across different cloud providers.

- Access to Kubernetes Ecosystem: Leverage various third-party tools and plugins.

- Greater Flexibility and Control: Ideal for teams familiar with Kubernetes needing more customization.

Consider an organization that has deployed its microservices-based retail application on ECS. Initially, ECS meets their needs during periods of manageable traffic. However, as the company grows, they encounter several challenges:

- Increased Scaling Requirements: Higher traffic volumes need to be handled efficiently.

- Rigid Resource Allocations: Limited flexibility in resource management.

- Inefficient Autoscaling: ECS’s built-in autoscaling features may not efficiently handle custom metrics or event-based scaling, such as:

  - Number of active users.

  - Queue depth in Amazon SQS.

  - Specific load events.

To address these challenges, the organization can consider migrating from ECS to EKS. Switching to EKS keeps the dependability and user-friendliness of AWS’s managed infrastructure while improving the organization’s capacity to grow and standardizing its tooling on Kubernetes. Combining the Kubernetes Horizontal Pod Autoscaler with KEDA’s event-driven scalers on EKS covers elastic scaling needs such as scaling on queue depth or other custom metrics. The team may also want to use Kubernetes to unify tooling and deployment pipelines across environments. For example, it can use Istio to manage traffic, Prometheus for in-depth monitoring, and Helm charts to template deployments consistently, all of which are better supported in a Kubernetes environment. Because EKS is a managed service, the team continues to benefit from AWS’s managed offerings while keeping the option to run the same workloads elsewhere.
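As a minimal sketch of the event-based scaling described above, a KEDA ScaledObject can scale a Deployment on SQS queue depth. It assumes KEDA is already installed in the cluster and that the KEDA operator's IAM identity is allowed to read the queue's attributes. The Deployment name `order-worker`, the queue URL, and the thresholds are hypothetical placeholders, not values from this migration.

```yaml
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: order-worker-scaler          # hypothetical name
spec:
  scaleTargetRef:
    name: order-worker               # hypothetical Deployment that consumes the queue
  minReplicaCount: 1
  maxReplicaCount: 20
  triggers:
    - type: aws-sqs-queue
      metadata:
        # Placeholder queue URL; replace with your own queue.
        queueURL: https://sqs.us-east-1.amazonaws.com/111122223333/orders
        queueLength: "5"             # target number of messages per replica
        awsRegion: us-east-1
        identityOwner: operator      # authenticate with the KEDA operator's IAM identity
```

KEDA manages a Horizontal Pod Autoscaler for the target Deployment behind the scenes, so the two mechanisms work together rather than competing.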

Benefits of Kubernetes for Orchestration

Kubernetes offers several advantages over simpler orchestration platforms like ECS, making it suitable for managing complex and large-scale applications.

- Self-healing: Kubernetes automatically replaces failed containers, restarts them in case of crashes, and reschedules them on healthy nodes if a node becomes unavailable. This ensures application stability with minimal manual intervention.

- Complex deployment strategies: Kubernetes Deployments support rolling updates natively, and patterns such as blue-green deployments and canary releases can be built on top of Deployments, Services, and labels. These allow gradual rollout of updates, reducing downtime and minimizing risk.

- Service discovery and networking: Kubernetes provides built-in service discovery through DNS-based mechanisms and automatically assigns IPs to Pods. Services can communicate seamlessly within the cluster without manual configuration.

- Enhanced load balancing: Kubernetes uses Services to distribute incoming traffic evenly across Pods, ensuring high availability and efficient resource utilization. It can also integrate with external load balancers to manage traffic at scale.

- Fine-grained control over scaling: Kubernetes supports horizontal pod autoscaling, where Pods are scaled based on metrics like CPU or memory usage. Vertical scaling, which adjusts the resources allocated to Pods, is available through the Vertical Pod Autoscaler add-on, and the Cluster Autoscaler adds or removes nodes to handle workload spikes.

- Community support and ecosystem: Kubernetes has a vast open-source community that continuously innovates and provides extensive tools and extensions. Features like Helm charts, Prometheus monitoring, and Istio for service meshes make Kubernetes a highly customizable platform for varied use cases.
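To make the scaling point above concrete, here is a hedged sketch of a Horizontal Pod Autoscaler that keeps average CPU utilization near 70%. The Deployment name `web` and the replica bounds are illustrative assumptions. It also assumes the Kubernetes Metrics Server is installed and that the target containers declare CPU requests, since utilization is calculated relative to requests.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: web-hpa                # hypothetical name
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: web                  # hypothetical Deployment to scale
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # percent of the requested CPU
```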

Prerequisites for Migration

Before migrating from ECS to EKS, it’s essential to identify and address several prerequisites to ensure a seamless transition.

- Start by assessing current ECS workloads, including task definitions, service configurations, scaling policies, and dependencies, to understand how they translate to Kubernetes. This involves analyzing resource requirements, application dependencies, and any custom configurations that might need adjustments in EKS.

- Additionally, prepare Kubernetes manifests or Helm charts for your applications. This step involves translating ECS task definitions and services into Kubernetes-native resources such as Deployments, Services, ConfigMaps, and Secrets. Helm charts can simplify application deployment and management, especially for complex workloads.

Step-by-Step ECS to EKS Migration Plan

Migrating from Amazon ECS to EKS requires careful planning and execution. This section outlines a step-by-step guide to help you transition smoothly and efficiently.

Step 1: Set Up the EKS Cluster

To begin working with Amazon Elastic Kubernetes Service (EKS), you can either use an existing Kubernetes cluster or create a new one. This walkthrough creates a new EKS cluster. You can do this with Terraform, eksctl, the AWS Management Console, or AWS CloudFormation templates; we use Terraform here because it keeps the infrastructure versioned and easier to manage.

Here is an example configuration file for creating your own EKS cluster. Make sure the AWS CLI is installed and configured for your account first. For more information, check out the official AWS documentation.

# Provider
provider "aws" {
  region = "us-east-1"
}

# VPC
module "vpc" {
  source  = "terraform-aws-modules/vpc/aws"
  version = "~> 4.0"

  name = "eks-vpc"
  cidr = "10.0.0.0/16"

  azs             = ["us-east-1a", "us-east-1b"]
  private_subnets = ["10.0.1.0/24", "10.0.2.0/24"]
  public_subnets  = ["10.0.101.0/24", "10.0.102.0/24"]

  enable_nat_gateway   = true
  single_nat_gateway   = true
  enable_dns_hostnames = true
  enable_dns_support   = true

  tags = {
    Terraform   = "true"
    Environment = "dev"
  }
}

# EKS Cluster
module "eks" {
  source  = "terraform-aws-modules/eks/aws"
  version = "~> 19.0"

  cluster_name    = "my-eks-cluster"
  cluster_version = "1.24"

  cluster_endpoint_private_access = true
  cluster_endpoint_public_access  = true

  vpc_id     = module.vpc.vpc_id
  subnet_ids = module.vpc.private_subnets

  enable_irsa = true

  # Add the security group to the cluster
  cluster_security_group_additional_rules = {
    ingress_http = {
      description = "HTTP ingress"
      protocol    = "tcp"
      from_port   = 80
      to_port     = 80
      cidr_blocks = ["0.0.0.0/0"]
      type        = "ingress"
    }
    ingress_https = {
      description = "HTTPS ingress"
      protocol    = "tcp"
      from_port   = 443
      to_port     = 443
      cidr_blocks = ["0.0.0.0/0"]
      type        = "ingress"
    }
  }

  # Add the security group to the node groups
  node_security_group_additional_rules = {
    ingress_self_all = {
      description = "Node to node all ports/protocols"
      protocol    = "-1"
      from_port   = 0
      to_port     = 0
      type        = "ingress"
      self        = true
    }
    ingress_https = {
      description = "HTTPS ingress"
      protocol    = "tcp"
      from_port   = 443
      to_port     = 443
      cidr_blocks = ["0.0.0.0/0"]
      type        = "ingress"
    }
    egress_all = {
      description = "Node all egress"
      protocol    = "-1"
      from_port   = 0
      to_port     = 0
      type        = "egress"
      cidr_blocks = ["0.0.0.0/0"]
    }
  }

  # Node Group
  eks_managed_node_group_defaults = {
    disk_size = 50
  }

  eks_managed_node_groups = {
    general = {
      desired_size   = 1
      min_size       = 1
      max_size       = 1
      instance_types = ["t3.small"]
      capacity_type  = "SPOT"
    }
  }
}

# Outputs
output "cluster_endpoint" {
  description = "Endpoint for EKS control plane"
  value       = module.eks.cluster_endpoint
}

output "cluster_security_group_id" {
  description = "Security group ID attached to the EKS cluster"
  value       = module.eks.cluster_security_group_id
}

output "cluster_name" {
  description = "Kubernetes Cluster Name"
  value       = module.eks.cluster_name
}

This configuration defines the AWS provider and creates a Virtual Private Cloud using the terraform-aws-modules/vpc module, with public and private subnets, NAT gateway, and DNS settings. The terraform-aws-modules/eks module is then used to create an EKS cluster named my-eks-cluster with Kubernetes version 1.24. The cluster is configured to support private and public endpoints, and “IAM Roles for Service Accounts” is enabled for fine-grained permissions. Additional security group rules are defined for the cluster and its managed node groups, allowing ingress for HTTP/HTTPS traffic and ensuring node-to-node communication. A single managed node group with one t3.small SPOT instance is created. Finally, outputs provide the cluster endpoint, security group ID, and cluster name, making it easy to reference this setup in other parts of the infrastructure.

Run the following commands to initialize Terraform and apply the configuration:

terraform init && terraform apply

You can check the AWS Management Console to verify that the cluster was created.

After creating the EKS cluster, configure the kubeconfig file to enable kubectl to connect to the cluster. You can do this using the AWS CLI:

aws eks update-kubeconfig --region <region> --name <cluster-name>

Step 2: Rewrite task definition files into Kubernetes Deployment manifests

Convert your ECS task definitions into Kubernetes Deployment manifests. This will involve creating Kubernetes YAML files for pods, deployments, and services and replacing the ECS definitions. Key migration elements include environment variables, port mappings, and resource allocations such as CPU and memory.

For instance, the ECS Task definition for the NGINX container might look like this:

{
  "family": "webserver",
  "containerDefinitions": [
    {
      "name": "web",
      "image": "nginx",
      "memory": 100,
      "cpu": 99,
      "essential": true,
      "portMappings": [
        {
          "containerPort": 80,
          "protocol": "tcp"
        }
      ]
    }
  ],
  "requiresCompatibilities": [
    "FARGATE"
  ],
  "networkMode": "awsvpc",
  "memory": "512",
  "cpu": "256"
}

Let’s see an example of how we can convert it into a single Kubernetes manifest file for NGINX:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:latest
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  type: LoadBalancer
  selector:
    app: nginx
  ports:
    - port: 80
      targetPort: 80

This Kubernetes manifest file defines a Kubernetes Deployment and a Service for deploying an NGINX web server application. The Deployment specifies a single replica of an NGINX container running the latest image, exposing port 80 for HTTP traffic. It includes a selector to match pods with the label nginx, ensuring that the deployment's pods are appropriately identified. The Service of type LoadBalancer exposes the NGINX application to external traffic by creating a load balancer in the underlying infrastructure. It forwards traffic from port 80 on the load balancer to port 80 on the NGINX container. It uses the same app selector to route traffic to the correct pods, making the application accessible to users over the internet.
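The manifest above does not carry over the CPU and memory settings from the task definition. One way to express them, shown here as a sketch rather than a required mapping, is with resource requests and limits on the container. ECS counts CPU in units of 1/1024 of a vCPU, so the task-level `"cpu": "256"` corresponds to roughly `250m`, and `"memory": "512"` (MiB) to `512Mi`. Whether to set requests, limits, or both is a judgment call for your workload. The fragment below could be merged into the Deployment's pod template before applying it.

```yaml
# Fragment of the Deployment's pod template (spec.template.spec),
# not a complete manifest on its own.
containers:
  - name: nginx
    image: nginx:latest
    ports:
      - containerPort: 80
    resources:
      requests:
        cpu: 250m          # about 0.25 vCPU, mirroring "cpu": "256"
        memory: 512Mi      # mirroring "memory": "512"
      limits:
        memory: 512Mi      # cap memory at the same value as the task definition
```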

Now, we apply this new manifest file to the EKS cluster:

kubectl apply -f nginx-manifest.yaml

To get the newly created deployments, run this command:

kubectl get deploy

Step 3: Networking and Load Balancing

In Amazon EKS, Kubernetes Service and Ingress resources control how traffic is load balanced and how applications are reached from outside the cluster, so configuring them correctly is essential for a robust setup. A Kubernetes Service of type LoadBalancer automatically provisions an AWS load balancer and associates it with the Service, directing traffic to the appropriate pods. By default this is a Classic Load Balancer, or a Network Load Balancer when the AWS Load Balancer Controller or the corresponding Service annotation is used. Kubernetes Ingress resources allow advanced HTTP routing to specific services, such as path-based or host-based routing. Ingress controllers like the AWS Load Balancer Controller turn Ingress resources into Application Load Balancers, enabling features like SSL termination.

Setting Up AWS Load Balancer Controller for EKS:

The AWS Load Balancer Controller must be installed on your EKS cluster to automate the integration of Kubernetes resources with AWS load balancers. It creates and manages Application Load Balancers from Kubernetes Ingress resources and Network Load Balancers from Service resources of type LoadBalancer, handling their provisioning, configuration, and lifecycle so that little manual intervention is needed. Once the controller is installed, you can define Ingress resources that automatically create and configure ALBs with routing rules based on the defined services and paths. This enables scaling, routing, and secure access management for your Kubernetes applications hosted on EKS.
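As an illustration, an Ingress along the following lines would ask the controller to create an internet-facing ALB in front of the `nginx-service` Service from Step 2. This is a sketch that assumes the AWS Load Balancer Controller is already installed and that the cluster's subnets are tagged so the controller can discover them. The Ingress name is a placeholder.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-ingress                                   # hypothetical name
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing   # public ALB
    alb.ingress.kubernetes.io/target-type: ip           # route directly to pod IPs
spec:
  ingressClassName: alb
  rules:
    - http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: nginx-service
                port:
                  number: 80
```

When an ALB fronts the application this way, the Service itself can usually be of type ClusterIP instead of LoadBalancer, which avoids provisioning a second load balancer.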

Step 4: Data and Storage Migration

Migrating data volumes from ECS to EKS involves moving from ECS-specific storage configuration to Kubernetes-native Persistent Volumes (PVs) and Persistent Volume Claims (PVCs). If your ECS workloads use Amazon Elastic File System (EFS) for shared file storage, you can keep using the same file system: create a Persistent Volume that points to it (this relies on the Amazon EFS CSI driver being installed in the cluster), then define a Persistent Volume Claim for each application that needs access to the data. The PVC acts as an abstraction that links applications to the EFS storage without configuring access manually for each pod.
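A statically provisioned volume for an existing EFS file system might look like the sketch below. It assumes the Amazon EFS CSI driver is installed. The file system ID and object names are placeholders, and the 5Gi capacity is only there because the API requires a value.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: efs-pv                             # hypothetical name
spec:
  capacity:
    storage: 5Gi                           # required field; EFS itself grows elastically
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany                        # many pods can mount the share at once
  persistentVolumeReclaimPolicy: Retain    # keep the data if the claim is deleted
  storageClassName: ""                     # static binding, no dynamic provisioning
  csi:
    driver: efs.csi.aws.com
    volumeHandle: fs-0123456789abcdef0     # placeholder EFS file system ID
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: efs-claim                          # hypothetical name
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: ""
  volumeName: efs-pv                       # bind explicitly to the volume above
  resources:
    requests:
      storage: 5Gi
```

Pods then mount the data by referencing `efs-claim` in a `persistentVolumeClaim` volume.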

For applications using Amazon S3 for object storage, the migration involves configuring Kubernetes applications to access S3 directly through the AWS SDKs or mounting buckets with the Mountpoint for Amazon S3 CSI driver. Alternatively, you can set up a shared storage layer or a cache in Kubernetes that interacts with S3, making the transition smoother for applications that depend on object storage.

Managing Stateful Workloads During Migration:

Migrating stateful workloads such as databases, message queues, and other persistent applications requires special attention. In Kubernetes, stateful applications are typically managed using StatefulSets, which provide stable, persistent storage, predictable pod names, and network identities. When migrating stateful workloads, ensure that data migration occurs during off-peak hours to minimize disruption to the production environment. For databases, consider using tools like Velero for backup and restore operations to ensure consistency and reduce downtime. Additionally, thorough testing of stateful workloads post-migration is necessary to verify data integrity, performance, and availability in the new environment.
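To show what stable names and per-pod storage look like in practice, here is a minimal StatefulSet sketch. The workload (`redis:7`), the names, the replica count, and the `gp2` storage class are all illustrative assumptions. Replication between the pods is application-specific and is not configured here.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: queue                # headless Service that gives each pod a stable DNS name
spec:
  clusterIP: None
  selector:
    app: queue
  ports:
    - port: 6379
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: queue
spec:
  serviceName: queue         # must reference the headless Service above
  replicas: 3                # pods are named queue-0, queue-1, queue-2
  selector:
    matchLabels:
      app: queue
  template:
    metadata:
      labels:
        app: queue
    spec:
      containers:
        - name: redis
          image: redis:7
          ports:
            - containerPort: 6379
          volumeMounts:
            - name: data
              mountPath: /data
  volumeClaimTemplates:      # one PersistentVolumeClaim is created per pod
    - metadata:
        name: data
      spec:
        accessModes: ["ReadWriteOnce"]
        storageClassName: gp2          # assumption: an EBS-backed class exists in the cluster
        resources:
          requests:
            storage: 10Gi
```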

Step 5: Testing and Validation

Before migrating fully to EKS, it’s crucial to validate that all workloads are functioning as expected in the new environment. This involves checking if deployments have been successfully executed, pods are in a healthy state and are not producing a CrashLoopBackOff error, and services are accessible internally and externally. You can use kubectl get pods and kubectl get services to check the status of the pods and the services. Ensure that the workloads have the correct resource requests and limits and that all Kubernetes resources like ConfigMaps and Secrets are correctly configured. This validation phase helps catch configuration issues early and ensures a smooth migration experience. For example, this is the status for the deployed NGINX pod on the Kubernetes cluster:

Running Load and Integration Tests:

Once workloads are deployed to EKS, it’s essential to perform load and integration testing to ensure the cluster can handle the expected traffic and that the applications function correctly under realistic conditions. Tools like Apache JMeter, k6, or Artillery can be used for running performance and stress tests. These tests help validate that your application can scale properly under load and that the Kubernetes cluster is configured with appropriate resource limits to handle spikes in traffic. Running integration tests ensures that different components of the application interact correctly, such as API calls between services, database connectivity, and external integrations.

Step 6: Cutover to EKS

The cutover to EKS should be iterative so that it does not introduce unnecessary risk. Start by moving a small fraction of the traffic for some services hosted on ECS to the corresponding services on EKS through canary deployments. Routing user traffic gradually maintains service availability and lets you monitor traffic as it increases during the rollout: some users reach the EKS application, while the majority of traffic still goes to ECS. Watch performance metrics and logs during this stage so that issues can be resolved as they occur, and keep increasing the share gradually until all traffic goes to EKS. During the final phase, shifting traffic from a CloudFront staging distribution to the production distribution helps manage the switch of user traffic.
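The text above does not prescribe a traffic-splitting mechanism. One possible approach, sketched here as an assumption, is a weighted forward action on an ALB managed by the AWS Load Balancer Controller, sending 90% of requests to an ECS target group and 10% to the EKS Service. The target group ARN is a placeholder, and the sketch assumes the ECS service registers with a target group that is free to be attached to this ALB. The action name `ecs-eks-split` is hypothetical.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: cutover-ingress                  # hypothetical name
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-type: ip
    # Weighted forward: adjust the weights step by step during the rollout.
    alb.ingress.kubernetes.io/actions.ecs-eks-split: >
      {"type":"forward","forwardConfig":{"targetGroups":[{"targetGroupARN":"arn:aws:elasticloadbalancing:us-east-1:111122223333:targetgroup/ecs-web/0123456789abcdef","weight":90},{"serviceName":"nginx-service","servicePort":"80","weight":10}]}}
spec:
  ingressClassName: alb
  rules:
    - http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: ecs-eks-split      # must match the action name in the annotation
                port:
                  name: use-annotation   # tells the controller to use the annotation
```

Raising the EKS weight in small increments while watching metrics mirrors the gradual rollout described above. Setting it to 100 completes the cutover.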

Decommissioning ECS Resources:

Once all traffic has been successfully migrated to EKS and the Kubernetes environment is stable, start decommissioning ECS resources. This includes stopping ECS services, removing task definitions, and eventually deleting ECS clusters. This final step should only be performed once you are confident that the EKS environment is fully operational and there are no outstanding issues. Keeping backups and performing verification checks is essential to ensure all data and configurations are successfully migrated to Kubernetes before decommissioning ECS resources. This ensures that there is no data loss or service disruption during the final phase of the migration.

Common Challenges During Migration

Migrating workloads from ECS to EKS presents several challenges that organizations must address to ensure a smooth transition.

Resource Mapping Discrepancies

One significant hurdle is managing resource mapping discrepancies between the two platforms, as ECS and EKS handle services, scaling, and tasks differently, often requiring manual adjustments. In ECS, services are tightly integrated with AWS-specific constructs like target groups, task definitions, and service discovery, while EKS relies on Kubernetes constructs such as Deployments, Services, and ConfigMaps. These differences mean that tasks and services in ECS may need to be restructured to fit into Kubernetes’ pod-based architecture.

Scaling Differences

Scaling in ECS is managed through Service Auto Scaling policies, whereas EKS leverages Kubernetes-native mechanisms like the Horizontal Pod Autoscaler and Cluster Autoscaler, which require different configurations and monitoring tools. As a result, teams must often translate ECS-specific configurations into Kubernetes equivalents, which can be both time-intensive and error-prone without proper planning and tooling.

Reconfiguration

As discussed above, Kubernetes introduces concepts such as ConfigMaps and Secrets, so the way application settings, credentials, and environment variables are managed may need to be reconfigured. In ECS these usually live in the task definition; in Kubernetes they move into separate objects that pods reference. Handling this reconfiguration carefully minimizes disruptions and lets the application run efficiently in the new cluster using Kubernetes-native tools and practices.
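As a small sketch of this reconfiguration, environment variables that were listed in a task definition can be split into a ConfigMap for plain settings and a Secret for credentials, then injected into a container with `envFrom`. All names and values below are hypothetical.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: web-config           # hypothetical name
data:
  LOG_LEVEL: info            # non-sensitive setting
---
apiVersion: v1
kind: Secret
metadata:
  name: web-secrets          # hypothetical name
type: Opaque
stringData:
  DB_PASSWORD: change-me     # placeholder; do not commit real credentials
---
apiVersion: v1
kind: Pod
metadata:
  name: web
spec:
  containers:
    - name: web
      image: nginx:latest
      envFrom:               # every key becomes an environment variable
        - configMapRef:
            name: web-config
        - secretRef:
            name: web-secrets
```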

IAM Permissions

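Another critical aspect is handling differences in IAM permissions. ECS grants AWS permissions to containers through task roles, while EKS combines two mechanisms: Kubernetes role-based access control (RBAC) governs access to the Kubernetes API, and IAM Roles for Service Accounts (enabled by enable_irsa in the Terraform configuration above) grants pods access to AWS services. These do not map one-to-one onto an ECS IAM setup, so policies need careful revision.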

Failing to address these differences can lead to overly permissive access, posing security risks, or insufficient permissions, causing application failures. Teams must also ensure that all workloads have appropriate access to required AWS services like S3, DynamoDB, or RDS, and this often involves refactoring IAM roles to align with Kubernetes’ pod-based model.
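The sketch below illustrates both sides of the permission model under stated assumptions: a ServiceAccount annotated with an IAM role for AWS access, plus a Role and RoleBinding for access inside the cluster. The role ARN, account ID, and all names are placeholders. The IAM role is assumed to exist already with a trust policy for the cluster's OIDC provider.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: orders-sa                        # hypothetical name
  namespace: default
  annotations:
    # Placeholder ARN of an IAM role that trusts the cluster's OIDC provider.
    eks.amazonaws.com/role-arn: arn:aws:iam::111122223333:role/orders-s3-read
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: config-reader
  namespace: default
rules:
  - apiGroups: [""]                      # "" is the core API group
    resources: ["configmaps"]
    verbs: ["get", "list", "watch"]      # read-only access
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: orders-config-reader
  namespace: default
subjects:
  - kind: ServiceAccount
    name: orders-sa
    namespace: default
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: config-reader
```

Pods opt in by setting `serviceAccountName: orders-sa` in their spec, which keeps AWS permissions scoped to the workloads that need them.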

Cluster State Drift

Cluster state drift, where the live state of the cluster diverges from the configuration declared in manifests or infrastructure code, can further complicate migration by creating inconsistencies in application configurations and making stability harder to maintain. Robust rollback capabilities are also vital for recovering quickly from migration failures.

Application Dependencies

Addressing application dependencies and service configurations is essential to serve traffic reliably and maintain functionality, preventing disruptions during the migration process. Dependencies such as databases, external APIs, or other microservices must be carefully mapped and validated to ensure they are accessible and compatible with the EKS environment. This includes verifying networking configurations like service discovery, DNS settings, and ingress/egress rules to maintain seamless communication between components.
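For dependencies that stay outside the cluster, one option is an ExternalName Service, which gives an external host a stable in-cluster DNS name. This is a sketch: the Service name and the database endpoint are placeholders.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: orders-db            # hypothetical in-cluster name for the database
spec:
  type: ExternalName
  # Placeholder endpoint; replace with the real database hostname.
  externalName: orders.abc123xyz.us-east-1.rds.amazonaws.com
```

Applications can then connect to `orders-db` through cluster DNS. The Service only creates a DNS alias, so security groups and egress rules must still allow the connection.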

Transitioning from ECS to EKS can unlock enhanced scalability, flexibility, and compatibility with Kubernetes-native tools. Organizations can modernize their containerized workloads by leveraging AWS and third-party tools, addressing migration challenges, and planning contingencies. Careful preparation and adoption of best practices ensure a smooth migration journey.

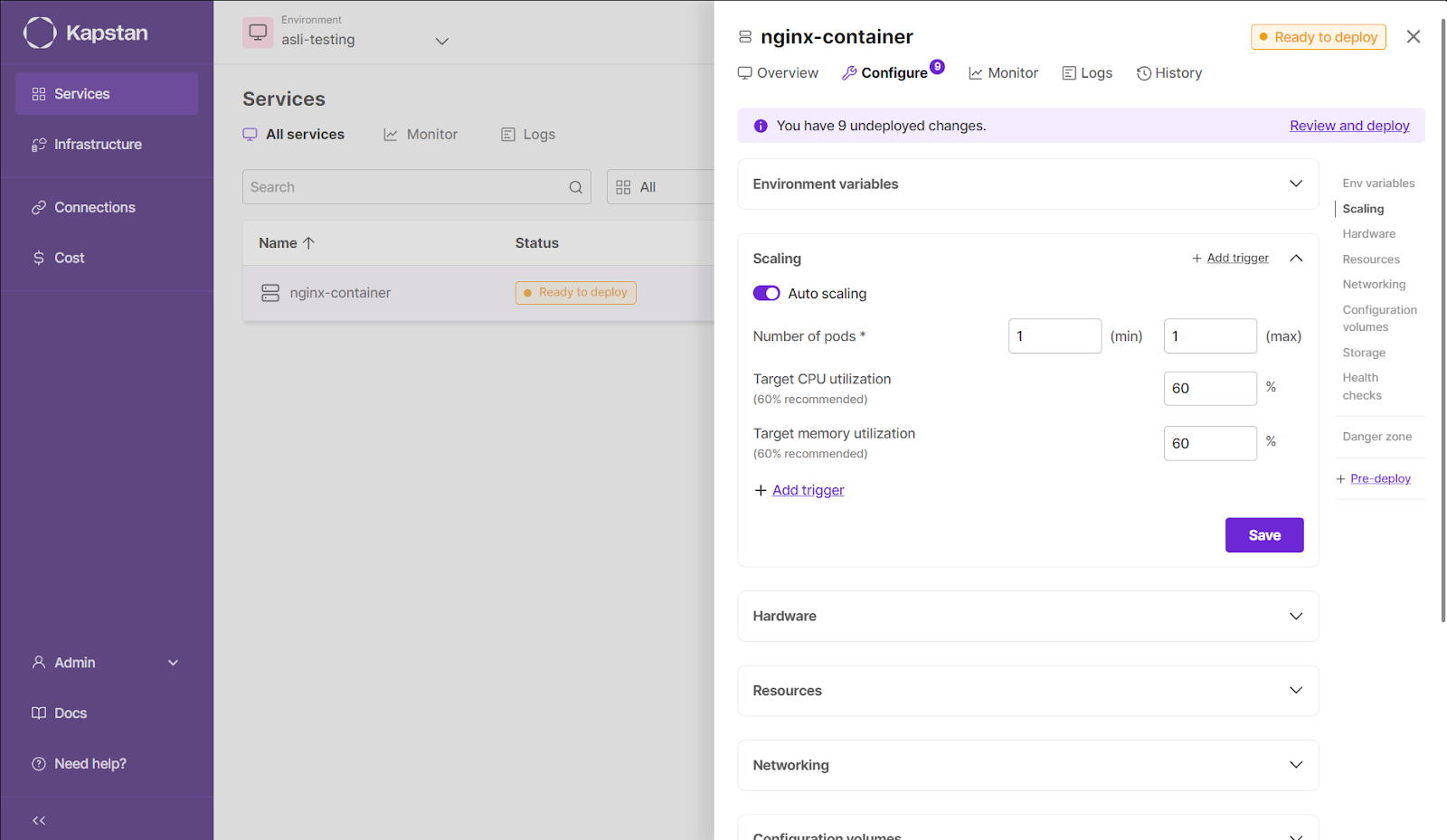

Introduction to Kapstan

Once EKS becomes the standard, organizations moving off Amazon ECS need to adopt sound Kubernetes cluster management practices. Kapstan provides straightforward ways to manage Kubernetes after the migration by offering an easy-to-use interface for EKS resources.

Kapstan's automated scaling capabilities manage performance and cost by adjusting resources to the application's real-time needs, which is crucial for dynamic applications whose load varies over time. It also makes updates easier, since teams can deploy new versions without disrupting service. Secrets management is included as well, keeping sensitive data encrypted and well protected.

By leveraging Kapstan's capabilities, organizations can overcome common EKS management challenges, such as manual scaling, complex updates, and security concerns. With Kapstan, EKS clusters can run smoothly, efficiently, and securely, allowing teams to focus on building and deploying innovative applications that drive business success.

Kapstan is a robust tool that streamlines EKS management by bringing automation, visibility, and security. It allows businesses to better their Kubernetes management processes, which helps with better resource usage, cost saving, and application performance. With Kapstan, teams can harness the full capabilities of Kubernetes and shift the focus to innovation and application development.

Conclusion ECS to EKS

Transitioning from ECS to EKS gives organizations more scalability and flexibility in managing their container workloads. In this blog, we compared ECS and EKS and highlighted the benefits and difficulties of switching. Teams can build applications suited to demanding scenarios by using Kubernetes features such as self-healing and fine-grained resource scaling, along with the many capabilities offered by the third-party ecosystem. Issues like resource mapping, IAM policies, and application dependencies can be resolved step by step during the migration. After switching to EKS, businesses can take advantage of the sophisticated capabilities Kubernetes offers for managing containers on AWS.

ECS to EKS FAQs

What are the steps to migrate from ECS to EKS?

- Spin up a new EKS environment

- Rewrite ECS task definition files

- Networking and load balancing

- Migrate data volumes

- Test and validate

- Re-route traffic to EKS cluster

What are the key factors to consider before migrating to EKS?

Evaluate application compatibility with Kubernetes, understand networking requirements, assess IAM policies, and prepare for operational overhead associated with Kubernetes.

How does EKS improve scalability compared to ECS?

EKS leverages Kubernetes' robust scaling mechanisms, such as Horizontal Pod Autoscaler and Cluster Autoscaler, providing finer control over resource allocation and scaling compared to ECS.

Can I use existing ECS container images in EKS?

Yes, existing ECS container images can be used in EKS, as both platforms are compatible with container images stored in Amazon ECR or other Docker registries.

What are the cost implications of migrating to EKS?

While EKS offers cost savings for specific use cases, it can also introduce additional costs for cluster management, node groups, and associated tools. A thorough cost analysis is recommended.

Is there an automated rollback option during migration failures?

While EKS does not provide automated rollback, you can design rollback mechanisms using Infrastructure as Code (IaC) tools like Terraform or AWS CloudFormation, ensuring quick recovery to ECS if needed.