Developing and deploying AI applications comes with unique challenges, such as complex resource scaling and a clunky developer experience. It is well understood that cloud-native applications should scale up and down to avoid overspending on compute, and this matters even more for AI applications because they run on expensive hardware such as GPUs. Developers, however, may struggle to make their applications do this reliably and performantly. This is exactly the problem Ray Serve sets out to solve: it provides a simple, ergonomic Python library for building applications around AI models that can scale from one node to thousands without complex configuration. In this post we will walk through getting started with Ray Serve, from creating your first application all the way to deploying it on a Kubernetes cluster. In a future post we will cover more advanced optimizations so that you can create a production-ready setup without the headache.

What is Ray Serve?

Ray is a distributed computing framework primarily oriented around Python applications. It simplifies various aspects of the AI/ML lifecycle, such as training models, tuning parameters and hyperparameters, loading data, and serving models. This post focuses on the last of these, which is implemented by the Ray Serve library. With Ray Serve, creating a highly scalable AI application can be as simple as adding a decorator to a Python class. From there, running your application on your local machine versus on a highly distributed compute cluster is a matter of simple configuration, not complex system design. Behind the scenes, the framework creates a control plane that coordinates worker processes to automate request distribution, autoscaling, and self-healing. Since your core logic remains plain Python code, it is simple to deploy advanced setups such as an ensemble model at scale without worrying about how efficiently each individual model uses compute. Ray Serve can serve a wide range of AI models, such as object detection, LLM-backed chatbots, and generative AI. Overall, Ray Serve provides an outstanding developer experience that empowers engineers to develop highly performant applications and run them at scale.

How to run Ray Serve?

The two most popular ways to run Ray Serve applications today are the enterprise Anyscale Platform and the open source KubeRay Operator for Kubernetes.

Anyscale Platform

Pros:

- Fully hosted and managed

- Receives advanced features before the open source offering

- Can deploy in your own cloud or use a fully hosted platform

Cons:

- Requires a subscription

- Vendor lock-in (hosted)

- Minimal control over infrastructure (hosted)

KubeRay

Pros:

- Fully open source and free to use

- No vendor lock-in, ever

- Full control over infrastructure leading to greater flexibility

- Integrates well with vast Kubernetes ecosystem

Cons:

- Can be complex to manage and debug

- Requires Kubernetes expertise to fully utilize

Overall, there are good reasons to choose either option, but for this post we will go with KubeRay since it is open source and gives us full control over our infrastructure. Later on we’ll discuss how a platform like Kapstan can greatly simplify getting the most out of Kubernetes for Ray Serve applications and more.

Creating your First Deployment

For the purpose of this post we will be deploying the bart-large-cnn text summarization model from HuggingFace to highlight how quickly we can create an application that utilizes open source models. The full code for this example, as well as all other code referenced in this post, can be found here.

# Decorator to convert this class into a Ray Serve Deployment

@serve.deployment

class SummaryDeployment:

def __init__(self):

# Initialize the model

self._model = pipeline("summarization", model="facebook/bart-large-cnn")

def __call__(self, request: Request) -> Dict:

# Query the model

return self._model(request.query_params["text"],max_length=130, min_length=30, do_sample=False)

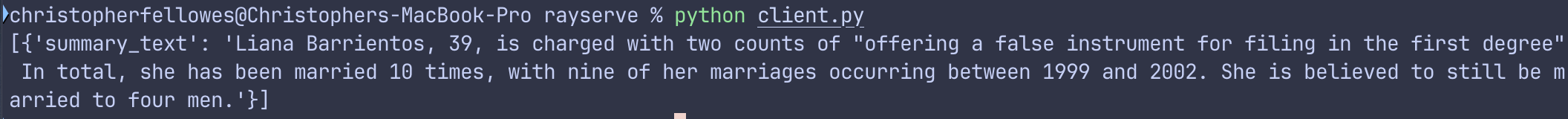

# Creates an instance of the Deployment to be used by the Ray Serve framework

app = SummaryDeployment.bind()With just a handful of lines of code, we have defined an extremely simple AI application that can easily be scaled to hundreds of nodes. By hiding the complexity of distributed compute behind the scenes, Ray Serve allows us to focus on defining application logic in a simple and intuitive way. Here our class loads a model when initialized and passes a text to the model when called. By adding the deployment annotation on line 2 and calling bind() on line 13 the class is ready to be used by the Ray Serve framework. This the application can now be accessed by running serve run main:app and called using python client.py which contains an example article from the HuggingFace docs for this model:

With only a handful of lines of code we already have our first deployment working!
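The client.py file itself is not reproduced in this post, so the following is only a minimal sketch of what such a client could look like, using the standard library. It assumes the application is listening on Ray Serve's default HTTP port (8000) on localhost and that the deployment reads the article from the text query parameter, as the class above does. The function names are hypothetical and not taken from the repository.

```python
"""Hypothetical client sketch; the real client.py lives in the post's repository."""
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumption: `serve run main:app` exposes the app on Ray Serve's default HTTP port.
SERVE_URL = "http://localhost:8000/"


def build_request_url(text: str, base_url: str = SERVE_URL) -> str:
    """Encode the article as the `text` query parameter the deployment reads."""
    return f"{base_url}?{urlencode({'text': text})}"


def summarize(text: str, base_url: str = SERVE_URL, timeout: float = 120.0) -> list:
    """Send the article to the deployment and decode the JSON response."""
    with urlopen(build_request_url(text, base_url), timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the application running, calling print(summarize(article)) on a news article should print a list containing a summary_text entry. The generous timeout is a guess to allow for a slow first request on CPU.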

Containerizing your Ray Serve application

Python container images are often fairly large because they ship an interpreter along with every dependency of the application. With AI applications the size often grows even further as massive libraries such as PyTorch and TensorFlow are added, leading to extremely slow build times and a poor developer experience. To get around this, we can use local build caching and careful layer ordering so that local image builds do not take minutes every time. Read more about how this works here.
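To see why layer ordering matters, here is a toy model of a layer cache written in Python. It is a deliberate simplification and not Docker's actual algorithm: it assumes each layer's cache key depends only on its parent's key, its instruction text, and the contents of the files it copies. The layer list and file contents are hypothetical.

```python
import hashlib


def layer_keys(layers, files):
    """Return one cache key per layer.

    Toy model: a layer's key hashes its parent key, its instruction text, and
    the contents of any files it copies in. Real builders are more involved.
    """
    keys, parent = [], ""
    for instruction, inputs in layers:
        digest = hashlib.sha256(parent.encode())
        digest.update(instruction.encode())
        for name in inputs:
            digest.update(files[name].encode())
        parent = digest.hexdigest()
        keys.append(parent)
    return keys


def rebuilt_layers(layers, old_files, new_files):
    """List the instructions whose cache key changed between two builds."""
    old, new = layer_keys(layers, old_files), layer_keys(layers, new_files)
    return [layers[i][0] for i in range(len(layers)) if old[i] != new[i]]


# Hypothetical layer list: dependencies are installed before the app code is copied.
LAYERS = [
    ("RUN install system packages", []),
    ("COPY requirements.txt + RUN pip install", ["requirements.txt"]),
    ("COPY main.py", ["main.py"]),
]

before = {"requirements.txt": "ray[serve]\ntransformers\ntorch\n", "main.py": "v1"}
after_code_change = {**before, "main.py": "v2"}
after_dep_change = {**before, "requirements.txt": before["requirements.txt"] + "requests\n"}

print(rebuilt_layers(LAYERS, before, after_code_change))
# ['COPY main.py']
print(rebuilt_layers(LAYERS, before, after_dep_change))
# ['COPY requirements.txt + RUN pip install', 'COPY main.py']
```

In this model, editing only the application code invalidates just the final layer, while the slow dependency installation is reused from cache. That is the reason for copying requirements.txt and installing dependencies before copying main.py.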

Dockerfile

    FROM python:3.12-slim

    ENV PYTHONDONTWRITEBYTECODE=1
    ENV PYTHONUNBUFFERED=1

    # Ray Serve uses wget for readiness and liveness probes
    RUN apt-get update \
        && apt-get install -y --no-install-recommends wget \
        && rm -rf /var/lib/apt/lists/*

    # Install dependencies before copying the app code so these layers stay cached
    COPY requirements.txt /tmp/requirements.txt
    RUN pip install --no-cache-dir --upgrade pip setuptools wheel
    RUN pip install --no-cache-dir -r /tmp/requirements.txt

    WORKDIR /serve_app
    COPY main.py .

    # No default command, KubeRay Operator will set this during deployment

Once the image has been built, we can test it and make sure everything is functioning normally:

Note: at the time of writing, host networking only works on Linux, so this command may not work if you are running on a different OS.

    docker run --platform linux/arm64 --network=host ray-blog:latest serve run main:app

    python client.py
    [{'summary_text': 'Liana Barrientos ...

Deploying on Kubernetes with the KubeRay Operator

For this section you will need a Kubernetes cluster and the Helm CLI. If you do not have a cluster, you can create one on your local machine using Minikube or K3s, or spin up a production-ready setup on Kapstan in a few clicks.

The simplest way to install KubeRay onto your cluster is with the official Helm chart. We will skip configuring the chart for this post since the default configuration is sufficient.

    helm repo add kuberay https://ray-project.github.io/kuberay-helm/
    helm repo update
    helm install kuberay-operator kuberay/kuberay-operator --wait

Now that the Operator is running, we can deploy our Ray Serve container image using the RayService custom resource, which is defined by a Custom Resource Definition (CRD). This resource is highly configurable (API reference), but for the initial setup we will create a simple cluster with one Head pod and one Worker pod. An annotated summary of the spec is shown below; visit the source code on GitHub for the full file:

    apiVersion: ray.io/v1alpha1
    kind: RayService
    metadata:
      name: ray-service
      namespace: ray-blog
    spec:
      serveConfigV2: |
        applications:
          - name: summary
            import_path: main:app
            route_prefix: /
      rayClusterConfig:
        rayVersion: '2.40.0'
        headGroupSpec:
          rayStartParams:
            dashboard-host: '0.0.0.0'
          template:
            spec:
              containers:
                - name: ray-head
                  image: ghcr.io/chrisfellowes-test-org/ray-blog:latest
        workerGroupSpecs:
          - replicas: 1
            minReplicas: 1
            maxReplicas: 1
            groupName: worker-group
            template:
              spec:
                containers:
                  - name: ray-worker
                    image: ghcr.io/chrisfellowes-test-org/ray-blog:latest
                    lifecycle:
                      preStop:
                        exec:
                          command: ["/bin/sh","-c","ray stop"]

Let’s break down each major component and describe how it works.

serveConfigV2: This section is used to configure how Ray Serve should run our code. We have a very simple setup here that defines our summarization application, where the code can be found, and what prefix to serve this application under on the Serve API. See the full config options here.

rayClusterConfig: This section controls the layout of our Ray Cluster. You can configure the Head Group as well as multiple Worker Groups to support complex deployment strategies, but here we have a simple setup with a single Worker Group. It is important to note that our Head Group runs the same image as our Worker Group so that both have access to our Deployment definition code. See the full config options here, or use the Kapstan platform to automatically create performant and highly available Ray Serve applications with no additional configuration!
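One way to keep the Head and Worker images from drifting apart is to generate the manifest from a single image value. The sketch below builds a trimmed version of the spec as a Python dictionary and is only an illustration: the helper names build_rayservice and images_used are hypothetical, the preStop hook is omitted for brevity, and the application name in the embedded Serve config is a placeholder.

```python
import json

# The Serve config is passed to KubeRay as a YAML string inside the manifest.
SERVE_CONFIG = """\
applications:
  - name: summary
    import_path: main:app
    route_prefix: /
"""


def build_rayservice(image: str, name: str = "ray-service", namespace: str = "ray-blog") -> dict:
    """Build a trimmed RayService manifest whose head and worker pods share one image."""

    def pod_template(container_name: str) -> dict:
        return {"spec": {"containers": [{"name": container_name, "image": image}]}}

    return {
        "apiVersion": "ray.io/v1alpha1",
        "kind": "RayService",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "serveConfigV2": SERVE_CONFIG,
            "rayClusterConfig": {
                "rayVersion": "2.40.0",
                "headGroupSpec": {
                    "rayStartParams": {"dashboard-host": "0.0.0.0"},
                    "template": pod_template("ray-head"),
                },
                "workerGroupSpecs": [
                    {
                        "groupName": "worker-group",
                        "replicas": 1,
                        "minReplicas": 1,
                        "maxReplicas": 1,
                        "template": pod_template("ray-worker"),
                    }
                ],
            },
        },
    }


def images_used(manifest: dict) -> set:
    """Collect every container image referenced by the head and worker groups."""
    cluster = manifest["spec"]["rayClusterConfig"]
    templates = [cluster["headGroupSpec"]["template"]]
    templates += [group["template"] for group in cluster["workerGroupSpecs"]]
    return {c["image"] for t in templates for c in t["spec"]["containers"]}


manifest = build_rayservice("ghcr.io/chrisfellowes-test-org/ray-blog:latest")
assert len(images_used(manifest)) == 1  # head and workers run the same image
print(json.dumps(manifest, indent=2))
```

Since kubectl accepts JSON as well as YAML, the printed output can be saved to a file and applied directly. Whether generating manifests this way is worth it over a hand-written YAML file depends on how many environments you maintain.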

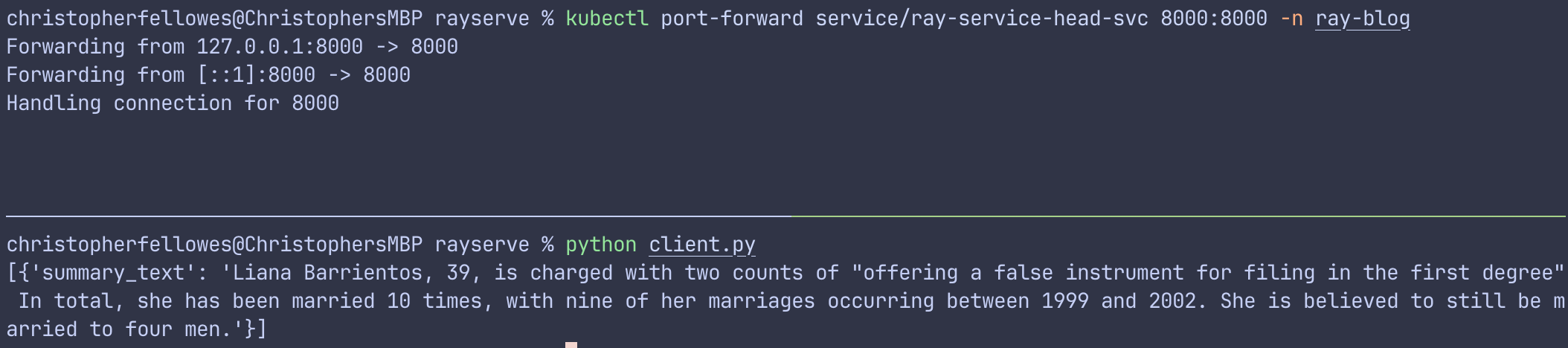

We can now apply our configured RayService resource and create our first Ray Serve application on Kubernetes. After the RayService has finished spinning up, we can port-forward the Serve endpoint and validate the deployment using our client.py file.

We can also port-forward the dashboard to get a full overview of our RayService’s cluster and all of the Ray Serve applications it is running:

    kubectl port-forward service/ray-service-head-svc 8265:8265 -n ray-blog

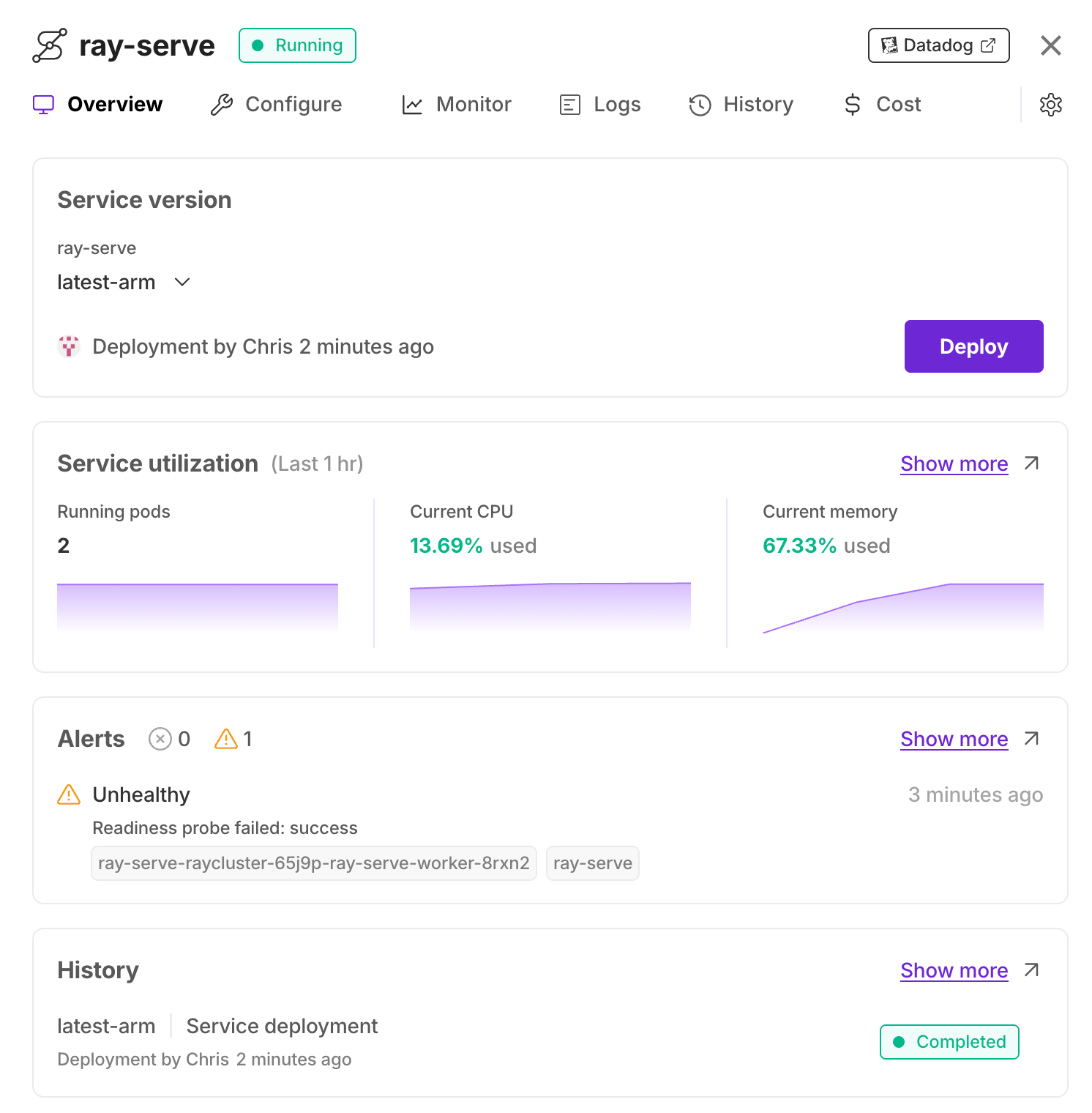
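A RayService can take a while to become ready, especially while the model downloads, so it can help to poll the Serve endpoint before running the client. The sketch below is one possible way to do that with the standard library. It assumes the Serve HTTP port has been forwarded to localhost:8000 and that the Serve proxy answers on the /-/healthz path, the kind of endpoint the wget-based probes would typically target. The function names and retry values are hypothetical.

```python
import time
from urllib.request import urlopen

# Assumption: the Serve HTTP port is forwarded to localhost:8000.
HEALTH_URL = "http://localhost:8000/-/healthz"


def http_probe(url: str = HEALTH_URL, timeout: float = 2.0) -> bool:
    """Return True when the endpoint answers with HTTP 200."""
    try:
        with urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except OSError:
        # Covers refused connections, timeouts, and HTTP error responses.
        return False


def wait_until_ready(probe=http_probe, attempts: int = 30, delay: float = 2.0, sleep=time.sleep) -> bool:
    """Call `probe` until it succeeds or `attempts` is exhausted."""
    for attempt in range(attempts):
        if probe():
            return True
        if attempt < attempts - 1:
            sleep(delay)  # back off before the next attempt
    return False
```

Calling wait_until_ready() before python client.py avoids confusing connection errors while the pods are still starting. The probe and sleep parameters are injectable so the retry logic can be tested without a cluster.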

Ray Serve on Kapstan

If you want to harness the full potential of Ray Serve without needing to become a Kubernetes expert, consider using the Kapstan platform to automate these operations behind the scenes. Your deployments will be well-architected and production-grade out of the box, with additional benefits for developer experience like integrated logging, monitoring, and alerting. Creating a Ray Serve application through the Kapstan platform is simple:

- Bring your existing Kubernetes cluster or have the platform provision one in your own cloud account

- Create a Ray Serve application

- Deploy!

Troubleshooting and Tips for Ray Serve

The example so far should give you a solid foundation to build your Ray Serve applications on, but it is also useful to know a few tips for when things go wrong, as well as some common gotchas.

- Log Collection: unlike traditional Kubernetes workloads, where you can inspect the logs of your pods directly, Ray Serve applications by default have their logs aggregated into the Head Group pods. You can inspect these under the /tmp/ray/session_*/logs path, or redirect them to the pod's standard error stream by setting the RAY_LOG_TO_STDERR environment variable to 1 in your pod spec.

- Class Serialization: in order to support distributed compute, Ray Serve requires that your entire Deployment class is serializable, or your worker pods will fail to run it. You can read more about this here.

- Zero-Downtime Upgrades: the RayService resource allows you to upgrade your Ray Serve application without downtime, but there are some subtle differences in how the upgrade occurs depending on which sections are edited. If the serveConfigV2 field is edited, your cluster (meaning your head and worker pods) will not be recreated or restarted; the new serveConfig is simply distributed across your cluster and applied in place. However, editing your rayClusterConfig will spin up a new cluster with the desired configuration, wait for it to pass health checks, and then roll traffic over and clean up your old cluster. Understanding this difference is key to ensuring you do not have any unexpected downtime.
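The upgrade rule described in the last tip can be written down as a small decision function. This is purely illustrative: it mirrors the behaviour described above and is not KubeRay's actual reconciliation logic. The function name, return labels, and example specs (including the 2.41.0 version) are hypothetical.

```python
def classify_update(old_spec: dict, new_spec: dict) -> str:
    """Describe the rollout implied by an edit to a RayService spec.

    Illustrative only: this mirrors the behaviour described above, it is not
    KubeRay's actual reconciliation logic.
    """
    if old_spec.get("rayClusterConfig") != new_spec.get("rayClusterConfig"):
        # Cluster-level change: a new cluster is created and traffic is switched over.
        return "new cluster, then traffic switch"
    if old_spec.get("serveConfigV2") != new_spec.get("serveConfigV2"):
        # Serve-only change: applied to the running cluster without recreating pods.
        return "in-place update"
    return "no change"


current = {
    "serveConfigV2": "applications:\n  - name: summary\n    import_path: main:app\n",
    "rayClusterConfig": {"rayVersion": "2.40.0"},
}
serve_edit = {**current, "serveConfigV2": current["serveConfigV2"] + "    route_prefix: /v2\n"}
cluster_edit = {**current, "rayClusterConfig": {"rayVersion": "2.41.0"}}

print(classify_update(current, serve_edit))    # in-place update
print(classify_update(current, cluster_edit))  # new cluster, then traffic switch
```

A check like this could run in code review or CI to flag edits that will trigger a full cluster replacement, so that such changes are rolled out deliberately.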

Ray Serve Conclusion

We hope this post has been a useful first step in diving into the Ray Serve framework and has demystified the process of running AI applications on Kubernetes. If you are interested in taking full advantage of all the capabilities this powerful framework has to offer, consider trying the Kapstan platform to accelerate your application development. Keep an eye out for the second part of this series where we cover more complex model orchestration, running a high-availability head group, and incorporating telemetry!